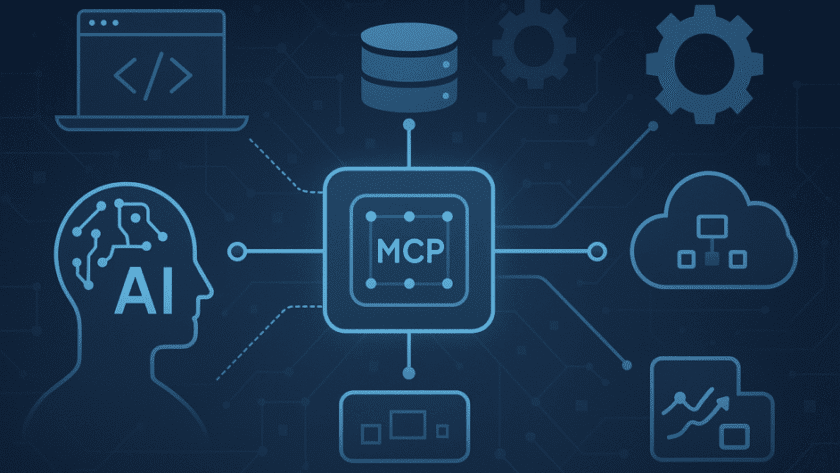

As artificial intelligence (AI) continues to gain traction across industries, the need for integration between AI models, data sources, and tools has become increasingly important. To address this need, the Model Context Protocol (MCP) has emerged as a key framework for standardizing AI connectivity. This protocol allows AI models,…