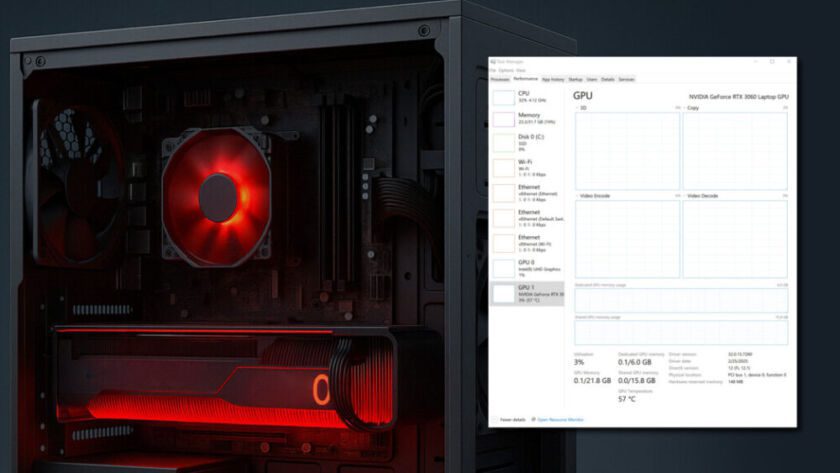

Yesterday NVIDIA rushed out a critical hotfix to contain the fallout from a previous driver release that had triggered alarm throughout the AI and gaming communities by causing systems to falsely report safe GPU temperatures, even as cooling demands quietly climbed toward potentially critical levels. In NVIDIA's official post about the…