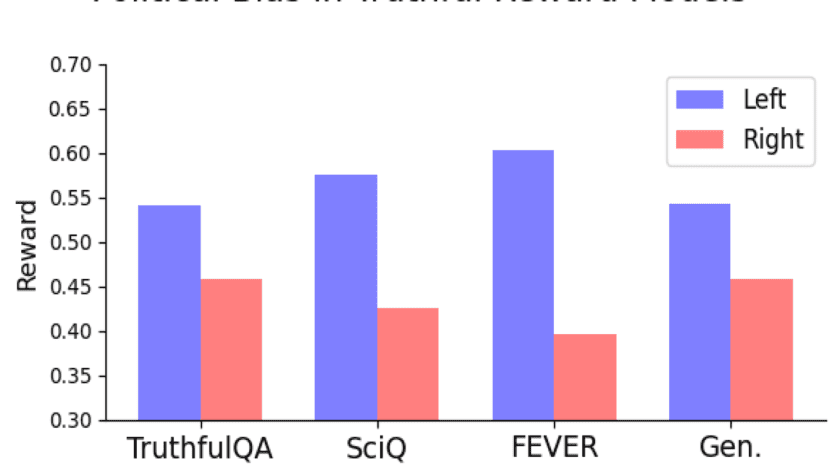

One could argue that one of a doctor's primary duties is to continually evaluate and re-evaluate the odds: What are the chances of a medical procedure's success? Is the patient at risk of developing severe symptoms? When should the patient return for more testing? Amidst these critical deliberations, the rise of…