A new paper out this week at Arxiv addresses an issue which anyone who has adopted the Hunyuan Video or Wan 2.1 AI video generators will have come across by now: temporal aberrations, where the generative process tends to abruptly speed up, conflate, omit, or otherwise mess up crucial moments in a generated video:

Some of the temporal glitches that are becoming familiar to users of the new wave of generative video systems, highlighted in the new paper. To the right, the ameliorating effect of the new FluxFlow approach. Source: https://haroldchen19.github.io/FluxFlow/

The video above features excerpts from example test videos at the (be warned: rather chaotic) project site for the paper. We can see several increasingly familiar issues being remediated by the authors’ method (pictured on the right in the video), which is effectively a dataset preprocessing technique applicable to any generative video architecture.

In the first example, featuring ‘two children playing with a ball’, generated by CogVideoX, we see (on the left in the compilation video above and in the specific example below) that the native generation rapidly jumps through several essential micro-movements, speeding the children’s activity up to a ‘cartoon’ pitch. By contrast, the same dataset and method yield better results with the new preprocessing technique, dubbed FluxFlow (to the right of the image in the video below):

In the second example (using NOVA-0.6B) we see that a central motion involving a cat has in some way been corrupted or significantly under-sampled at the training stage, to the point that the generative system becomes ‘paralyzed’ and is unable to make the subject move:

This syndrome, where the motion or the subject gets ‘stuck’, is one of the most frequently reported bugbears of Hunyuan Video (HV) and Wan in the various image and video synthesis groups.

Some of these problems are related to video captioning issues in the source dataset, which we took a look at this week; but the authors of the new work focus their efforts on the temporal qualities of the training data instead, and make a convincing argument that addressing the challenges from that perspective can yield useful results.

As mentioned in the earlier article about video captioning, certain sports are particularly difficult to distil into key moments, meaning that critical events (such as a slam-dunk) do not get the attention they need at training time:

In the above example, the generative system does not know how to get to the next stage of movement, and transits illogically from one pose to the next, changing the attitude and geometry of the player in the process.

These are large movements that got lost in training; but equally vulnerable are far smaller but pivotal movements, such as the flapping of a butterfly’s wings:

Unlike the slam-dunk, the flapping of the wings is not a ‘rare’ event but rather a persistent and monotonous one. However, its consistency is lost in the sampling process, since the movement is so rapid that it is very difficult to establish temporally.

These are not particularly new issues, but they are receiving greater attention now that powerful generative video models are available to enthusiasts for local installation and free generation.

The communities at Reddit and Discord initially treated these issues as ‘user-related’. This is an understandable presumption, since the systems in question are very new and minimally documented. Various pundits have therefore suggested diverse (and not always effective) remedies for some of the glitches documented here, such as altering the settings in various components of diverse types of ComfyUI workflows for Hunyuan Video (HV) and Wan 2.1.

In some cases, rather than producing rapid motion, both HV and Wan will produce slow motion. Suggestions from Reddit and ChatGPT (which mostly leverages Reddit) include changing the number of frames in the requested generation, or radically lowering the frame rate*.

This is all desperate stuff; the emerging truth is that we don’t yet know the exact cause of, or the exact remedy for, these issues. Clearly, tormenting the generation settings to work around them (particularly when this degrades output quality, for instance with a too-low frame rate) is only a stopgap, and it’s good to see that the research scene is addressing emerging issues this quickly.

So, besides this week’s look at how captioning affects training, let’s take a look at the new paper about temporal regularization, and what improvements it might offer the current generative video scene.

The central idea is rather simple and slight, and none the worse for that; nonetheless the paper is somewhat padded in order to reach the prescribed eight pages, and we will skip over this padding as necessary.

The fish in the native generation of the VideoCrafter framework is static, while the FluxFlow-altered version captures the requisite changes. Source: https://arxiv.org/pdf/2503.15417

The new work is titled Temporal Regularization Makes Your Video Generator Stronger, and comes from eight researchers across Everlyn AI, Hong Kong University of Science and Technology (HKUST), the University of Central Florida (UCF), and The University of Hong Kong (HKU).

(At the time of writing, there are some issues with the paper’s accompanying project site.)

FluxFlow

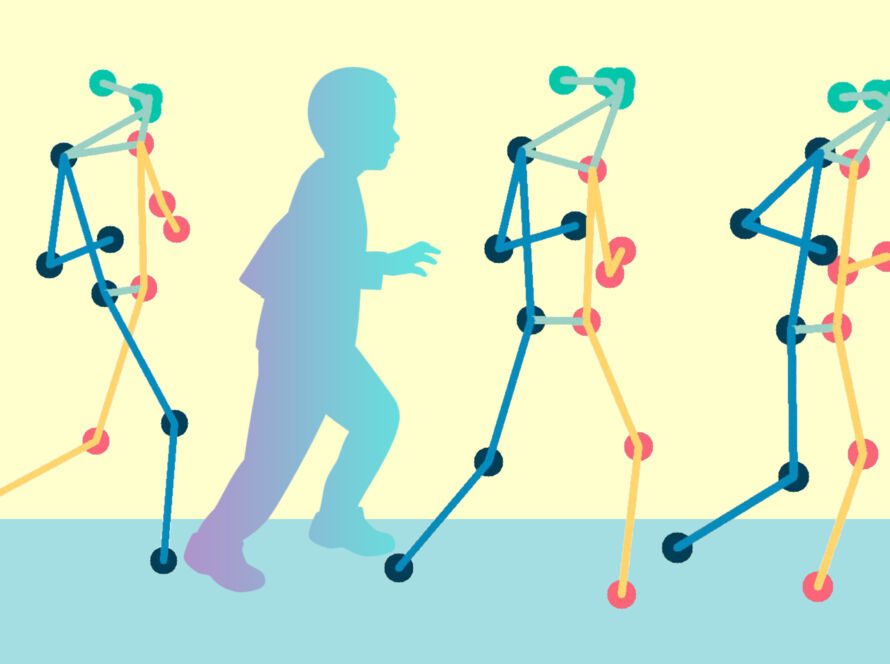

The central idea behind FluxFlow, the authors’ new pre-training schema, is to overcome the widespread problems of flickering and temporal inconsistency by shuffling blocks and groups of blocks in the temporal frame order as the source data is exposed to the training process:

The central idea behind FluxFlow is to move blocks and groups of blocks into unexpected and non-temporal positions, as a form of data augmentation.

The paper explains:

‘[Artifacts] stem from a fundamental limitation: despite leveraging large-scale datasets, current models often rely on simplified temporal patterns in the training data (e.g., fixed walking directions or repetitive frame transitions) rather than learning diverse and plausible temporal dynamics.

‘This issue is further exacerbated by the lack of explicit temporal augmentation during training, leaving models prone to overfitting to spurious temporal correlations (e.g., “frame #5 must follow #4”) rather than generalizing across diverse motion scenarios.’

Most video generation models, the authors explain, still borrow too heavily from image synthesis, focusing on spatial fidelity while largely ignoring the temporal axis. Though techniques such as cropping, flipping, and color jittering have helped improve static image quality, they are not adequate solutions when applied to videos, where the illusion of motion depends on consistent transitions across frames.

The resulting problems include flickering textures, jarring cuts between frames, and repetitive or overly simplistic motion patterns.

The paper argues that though some models, including Stable Video Diffusion and LlamaGen, compensate with increasingly complex architectures or engineered constraints, these come at a cost in terms of compute and flexibility.

Since temporal data augmentation has already proven useful in video understanding tasks (in frameworks such as FineCliper, SeFAR and SVFormer), it is surprising, the authors assert, that this tactic is rarely applied in a generative context.

Disruptive Behavior

The researchers contend that simple, structured disruptions in temporal order during training help models generalize better to realistic, diverse motion:

‘By training on disordered sequences, the generator learns to recover plausible trajectories, effectively regularizing temporal entropy. FLUXFLOW bridges the gap between discriminative and generative temporal augmentation, offering a plug-and-play enhancement solution for temporally plausible video generation while improving overall [quality].

‘Unlike existing methods that introduce architectural changes or rely on post-processing, FLUXFLOW operates directly at the data level, introducing controlled temporal perturbations during training.’

Frame-level perturbations, the authors state, introduce fine-grained disruptions within a sequence. This kind of disruption is not dissimilar to masking augmentation, where sections of data are randomly blocked out to prevent the system from overfitting on data points, and to encourage better generalization.
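
To make the idea concrete, here is a minimal Python sketch of a frame-level perturbation. It is not the authors’ code: the function name `shuffle_frames`, the `num_shuffled` parameter, and the choice to permute only a randomly picked subset of frame positions are assumptions made for illustration. Frames are treated as opaque items in a list, so the same logic would apply to image arrays or latents.

```python
import random
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def shuffle_frames(
    frames: Sequence[Frame], num_shuffled: int, rng: random.Random
) -> List[Frame]:
    """Return a copy of `frames` in which `num_shuffled` randomly chosen
    positions have had their frames permuted among themselves.

    Hypothetical helper for illustration; all other frames stay in place.
    """
    total = len(frames)
    if not 0 <= num_shuffled <= total:
        raise ValueError("num_shuffled must be between 0 and len(frames)")

    # Start from the identity ordering 0, 1, ..., total - 1.
    order = list(range(total))

    # Pick distinct positions to disturb, then permute those positions.
    picked = rng.sample(range(total), num_shuffled)
    permuted = picked[:]
    rng.shuffle(permuted)

    # Each picked slot receives the frame index from the permuted list.
    for slot, source in zip(picked, permuted):
        order[slot] = source

    return [frames[i] for i in order]
```

In a training loop, a call such as `shuffle_frames(clip, 4, random.Random(seed))` would be applied to each clip before it reaches the model, leaving the model architecture and loss untouched, which is what makes this kind of augmentation ‘plug-and-play’.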

Tests

Though the central idea here doesn’t run to a full-length paper, due to its simplicity, there is nonetheless a test section that we can take a look at.

The authors tested four questions: whether temporal quality improves while spatial fidelity is maintained; the ability to learn motion/optical flow dynamics; whether temporal quality is maintained in extraterm generation; and sensitivity to key hyperparameters.

The researchers applied FluxFlow to three generative architectures: U-Net-based, in the form of VideoCrafter2; DiT-based, in the form of CogVideoX-2B; and AR-based, in the form of NOVA-0.6B.

For fair comparison, they fine-tuned the architectures’ base models with FluxFlow as an additional training phase, for one epoch, on the OpenVidHD-0.4M dataset.

The models were evaluated against two popular benchmarks: UCF-101 and VBench.

For UCF, the Fréchet Video Distance (FVD) and Inception Score (IS) metrics were used. For VBench, the researchers concentrated on temporal quality, frame-wise quality, and overall quality.
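
For readers unfamiliar with the first of these metrics: FVD is a Fréchet distance between two Gaussians, one fitted to features extracted from real videos by a pretrained video network, and one fitted to features of generated videos. Lower is better. The notation below is ours rather than the paper’s, with $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ denoting the mean and covariance of the real and generated feature sets:

$$
\mathrm{FVD} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2\left( \Sigma_r \Sigma_g \right)^{1/2} \right)
$$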

Quantitative initial evaluation of FluxFlow-Frame. “+ Original” indicates training without FLUXFLOW, while “+ Num × 1” shows different FluxFlow-Frame configurations. Best results are shaded; second-best are underlined for each model.

Commenting on these outcomes, the authors state:

‘Both FLUXFLOW-FRAME and FLUXFLOW-BLOCK significantly improve temporal quality, as evidenced by the metrics in Tabs. 1, 2 (i.e., FVD, Subject, Flicker, Motion, and Dynamic) and qualitative results in [image below].

‘For instance, the motion of the drifting car in VC2, the cat chasing its tail in NOVA, and the surfer riding a wave in CVX become noticeably more fluid with FLUXFLOW. Importantly, these temporal improvements are achieved without sacrificing spatial fidelity, as evidenced by the sharp details of water splashes, smoke trails, and wave textures, along with spatial and overall fidelity metrics.’

Below we see selections from the qualitative results the authors refer to (please see the original paper for full results and better resolution):

Selections from the qualitative results.

The paper suggests that while both frame-level and block-level perturbations enhance temporal quality, frame-level methods tend to perform better. This is attributed to their finer granularity, which enables more precise temporal adjustments. Block-level perturbations, by contrast, may introduce noise due to tightly coupled spatial and temporal patterns within blocks, reducing their effectiveness.
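
For comparison, a block-level perturbation can be sketched in the same hedged spirit. Again, this is an illustration rather than the authors’ implementation: `shuffle_blocks`, `block_size` and `num_shuffled` are hypothetical names, and the sketch assumes that blocks are contiguous, non-overlapping runs of frames whose internal order is preserved while the blocks themselves are reordered.

```python
import random
from typing import List, Sequence, TypeVar

Frame = TypeVar("Frame")


def shuffle_blocks(
    frames: Sequence[Frame],
    block_size: int,
    num_shuffled: int,
    rng: random.Random,
) -> List[Frame]:
    """Split `frames` into contiguous blocks, permute `num_shuffled` of the
    blocks among themselves, and return the flattened result.

    Hypothetical helper for illustration; frame order inside a block is kept.
    """
    if block_size <= 0:
        raise ValueError("block_size must be positive")

    # Contiguous, non-overlapping blocks; the last one may be shorter.
    blocks = [
        list(frames[start:start + block_size])
        for start in range(0, len(frames), block_size)
    ]
    if not 0 <= num_shuffled <= len(blocks):
        raise ValueError("num_shuffled must be between 0 and the block count")

    # Pick distinct block positions and permute only those positions.
    picked = rng.sample(range(len(blocks)), num_shuffled)
    permuted = picked[:]
    rng.shuffle(permuted)

    reordered = list(blocks)
    for slot, source in zip(picked, permuted):
        reordered[slot] = blocks[source]

    # Flatten the blocks back into a single frame sequence.
    return [frame for block in reordered for frame in block]
```

Because whole runs of frames move together here, each disturbance displaces more content at once than a single-frame swap does, which is one way to picture the coarser granularity that the paper associates with block-level perturbations.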

Conclusion

This paper, together with the Bytedance-Tsinghua captioning collaboration released this week, has made it clear to me that the apparent shortcomings in the new generation of generative video models may not result from user error, institutional missteps, or funding limitations, but rather from a research focus that has understandably prioritized more urgent challenges, such as temporal coherence and consistency, over these lesser concerns.

Until recently, the results from freely available and downloadable generative video systems were so compromised that no great locus of effort emerged from the enthusiast community to redress the issues (not least because the issues were fundamental and not trivially solvable).

Now that we are so much closer to the long-predicted age of purely AI-generated photorealistic video output, it’s clear that both the research and casual communities are taking a deeper and more productive interest in resolving remaining issues; with any luck, these are not intractable obstacles.

* Wan’s native frame rate is a paltry 16fps, and in response to my own issues, I note that forums have suggested lowering the frame rate to as low as 12fps, and then using FlowFrames or other AI-based re-flowing systems to interpolate the gaps between such a sparse number of frames.

First published Friday, March 21, 2025