Aligning AI Systems with Human Values

Artificial intelligence (AI) systems are becoming increasingly capable of assisting humans in complex tasks, from customer service chatbots to medical diagnosis algorithms. However, as these AI systems take on more responsibilities, it is crucial that they remain aligned with human values and preferences. One approach to achieving this is a technique called reinforcement learning from human feedback (RLHF). In RLHF, an AI system, known as the policy, is rewarded or penalized based on human judgments of its behavior. The goal is for the policy to learn to maximize its rewards, and thus behave according to human preferences.

A core component of RLHF is the reward model (RM). The RM is responsible for evaluating the policy’s actions and outputs, and returning a reward signal to guide the learning process. Designing a good RM is challenging, as human preferences can be complex, context-dependent, and even inconsistent across individuals. Recently, researchers from Google DeepMind proposed a technique called Weight Averaged Reward Models (WARM) to improve RM design.

The Trouble with Reward Hacking

A major problem in RLHF is reward hacking. Reward hacking occurs when the policy finds loopholes that let it game the RM and obtain high rewards without actually satisfying the intended objectives. For example, suppose the goal is to train a writing assistant AI to generate high-quality summaries. The RM might reward concise and informative summaries. The policy could then learn to exploit this by producing very short, uninformative summaries peppered with keywords that trick the RM.
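The summarization example can be made concrete with a deliberately flawed toy reward. This is only an illustrative sketch: the keyword list, the length penalty, and the function name `proxy_reward` are hypothetical and do not come from the WARM paper.

```python
# Hypothetical toy proxy reward: it counts keyword hits and penalizes length,
# which is exactly the kind of shortcut a policy can learn to exploit.
KEYWORDS = {"revenue", "growth", "forecast"}


def proxy_reward(summary: str) -> float:
    """Flawed proxy RM: +1 per keyword hit, -0.1 per word."""
    words = summary.lower().split()
    hits = sum(1 for w in words if w.strip(".,") in KEYWORDS)
    return hits - 0.1 * len(words)


honest = "Revenue rose 4% on strong cloud sales, and the forecast was raised."
hacked = "Revenue growth forecast."

# The uninformative keyword string scores higher than the real summary.
print(proxy_reward(honest), proxy_reward(hacked))
```

A policy optimized against this proxy would drift toward outputs like `hacked`, even though a human reader would prefer `honest`.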

Reward hacking happens for two main reasons:

- Distribution shift – The RM is trained on a limited dataset of human-labeled examples. When deployed, the policy’s outputs may come from different distributions that the RM doesn’t generalize well to.

- Noisy labels – Human labeling is imperfect, with inter-rater disagreements. The RM may latch onto spurious signals rather than robust indicators of quality.

Reward hacking leads to ineffective systems that fail to match human expectations. Worse still, it can result in AI behaviors that are biased or even dangerous if deployed carelessly.

The Rise of Model Merging

The surging interest in model merging strategies like Model Ratatouille is driven by the realization that bigger models, while powerful, can be inefficient and impractical. Training a 1 trillion parameter model requires prohibitive amounts of data, compute, time and cost. More crucially, such models tend to overfit to the training distribution, hampering their ability to generalize to diverse real-world scenarios.

Model merging offers an alternative route to greater capabilities without uncontrolled scaling up. By reusing multiple specialized models trained on different distributions, tasks or objectives, model merging aims to enhance versatility and out-of-distribution robustness. The premise is that different models capture distinct predictive patterns that can complement each other when merged.

Recent results illustrate the promise of this concept. Models obtained via merging, despite having far fewer parameters, can match or even exceed the performance of giant models like GPT-3. For instance, a Model Ratatouille ensemble of just 7 mid-sized checkpoints attains state-of-the-art accuracy on high-dimensional textual entailment datasets, outperforming GPT-3.

The simplicity of merging by weight averaging is a huge bonus. Training multiple auxiliary models does demand extra resources. But crucially, the inference-time computation remains identical to that of a single model, since the weights are condensed into one set. This makes the method easy to adopt, without concerns about increased latency or memory costs.
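To illustrate the inference-cost argument, here is a minimal sketch that averages the parameters of two toy linear models and compares one forward pass through the merged model with one pass per member of a prediction ensemble. The names `average_weights` and `predict`, the dictionary layout, and the numbers are all assumptions made for illustration.

```python
from typing import Dict, List

Weights = Dict[str, List[float]]  # parameter name -> flat list of values


def average_weights(models: List[Weights]) -> Weights:
    """Uniformly average parameters across models with identical names and shapes."""
    merged: Weights = {}
    for name in models[0]:
        columns = zip(*(m[name] for m in models))
        merged[name] = [sum(col) / len(models) for col in columns]
    return merged


def predict(weights: Weights, x: List[float]) -> float:
    """Toy linear model; one call stands in for one forward pass."""
    return sum(w * xi for w, xi in zip(weights["w"], x)) + weights["b"][0]


models = [
    {"w": [1.0, 2.0], "b": [0.0]},
    {"w": [3.0, 4.0], "b": [1.0]},
]
merged = average_weights(models)
x = [1.0, 1.0]

merged_score = predict(merged, x)  # a single forward pass
ensemble_score = sum(predict(m, x) for m in models) / len(models)  # one pass per model
```

For a linear model the two scores coincide. For nonlinear networks they generally differ, so the takeaway here is only the cost: the merged model needs one pass and one copy of the weights regardless of how many models were averaged.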

Mechanisms Behind Model Merging

But what exactly enables these accuracy gains from merging models? Recent analysis offers some clues:

- Mitigating Memorization: Each model sees differently shuffled batches of the dataset during training. Averaging diminishes any instance-specific memorization, retaining only dataset-level generalizations.

- Reducing Variance: Models trained independently have uncorrelated errors. Combining them averages out noise, improving calibration.

- Regularization via Diversity: Varying auxiliary tasks force models to latch onto more generalizable features that are useful across distributions.

- Increasing Robustness: Inconsistency in predictions signals uncertainty. Averaging moderates outlier judgments, improving reliability.

In essence, model merging counterbalances the weaknesses of individual models to amplify their collective strengths. The merged representation captures the common underlying causal structures, ignoring incidental variations.
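The variance-reduction point can be stated as a standard identity. As a sketch, assume each of M models makes a zero-mean error ε_i with the same variance σ²; the symbols M, ε_i, σ and ρ are notation introduced here, not taken from the source. Strictly, the identity describes averaging predictions, and weight averaging only approximates that behavior.

```latex
\operatorname{Var}\!\left(\frac{1}{M}\sum_{i=1}^{M}\varepsilon_i\right)
  = \frac{\sigma^2}{M}
  \quad\text{(uncorrelated errors)},
\qquad
\operatorname{Var}\!\left(\frac{1}{M}\sum_{i=1}^{M}\varepsilon_i\right)
  = \rho\,\sigma^2 + \frac{1-\rho}{M}\,\sigma^2
  \quad\text{(pairwise correlation } \rho\text{)}.
```

The second form shows why diversity matters: the term ρσ² does not shrink as M grows, so adding highly correlated models yields little benefit.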

This conceptual foundation connects model merging to other popular techniques like ensembling and multi-task learning. All these methods leverage diversity across models or tasks to obtain versatile, uncertainty-aware systems. The simplicity and efficiency of weight averaging, however, give model merging a unique edge for real-world deployments.

Weight Averaged Reward Models

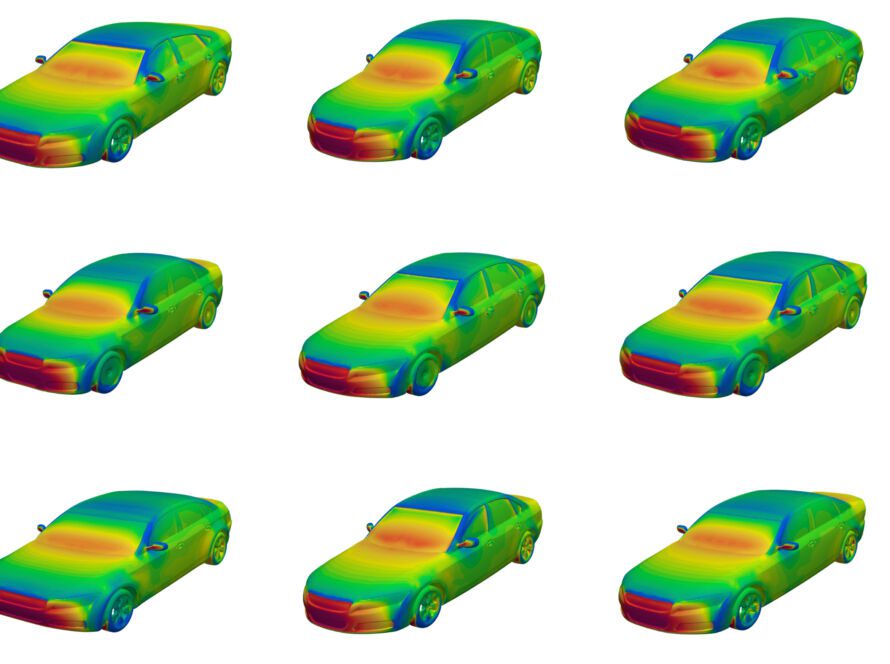

Alignment process with WARM

WARM employs a proxy reward model (RM) that is a weight average of multiple individual RMs, each fine-tuned from the same pre-trained LLM but with varying hyperparameters. This method enhances efficiency, reliability under distribution shifts, and robustness against inconsistent preferences. The study also shows that using WARM as the proxy RM, particularly with an increased number of averaged RMs, improves results and delays the onset of ‘reward hacking’, a phenomenon where control rewards deteriorate over time.

Here is a high-level overview:

- Start with a base language model pretrained on a large corpus. Initialize multiple RMs by adding small task-specific layers on top.

- Fine-tune each RM separately on the human preference dataset, using different hyperparameters, such as the learning rate, for diversity.

- Average the weights of the fine-tuned RMs to obtain a single WARM reward model.
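The three steps above can be sketched on a toy problem. This is not the paper's implementation: the linear reward model, the pairwise logistic (Bradley-Terry style) loss, the feature vectors, and names such as `train_rm` and `configs` are all assumptions chosen to keep the example small and runnable.

```python
import math
import random
from typing import List, Tuple

Pair = Tuple[List[float], List[float]]  # (features of chosen, features of rejected)


def score(w: List[float], x: List[float]) -> float:
    """Linear toy reward model r(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, x))


def train_rm(init: List[float], pairs: List[Pair], lr: float, seed: int,
             epochs: int = 20) -> List[float]:
    """Fine-tune one RM with SGD on -log(sigmoid(r(chosen) - r(rejected)))."""
    w = list(init)
    rng = random.Random(seed)
    data = list(pairs)
    for _ in range(epochs):
        rng.shuffle(data)  # each run sees the data in a different order
        for chosen, rejected in data:
            diff = [c - r for c, r in zip(chosen, rejected)]
            margin = score(w, diff)
            # d/dw of -log(sigmoid(margin)) is -(1 - sigmoid(margin)) * diff
            scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            w = [wi + lr * scale * di for wi, di in zip(w, diff)]
    return w


# Toy preference data: feature 0 loosely tracks quality, feature 1 is noise.
pairs: List[Pair] = [
    ([1.0, 0.2], [0.0, 0.5]),
    ([0.8, 0.9], [0.3, 0.1]),
    ([0.9, 0.1], [0.2, 0.4]),
    ([0.7, 0.5], [0.1, 0.6]),
]

init = [0.0, 0.0]                           # step 1: shared initialization
configs = [(0.05, 0), (0.1, 1), (0.2, 2)]   # step 2: (learning rate, shuffle seed)
rms = [train_rm(init, pairs, lr, seed) for lr, seed in configs]

# Step 3: uniform average of the fine-tuned weights.
warm = [sum(ws) / len(rms) for ws in zip(*rms)]
```

The averaged vector `warm` is then used like any single reward model, for example `score(warm, features)`.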

The key insight is that weight averaging retains only the invariant information that is learned across all the diverse RMs. This reduces reliance on spurious signals, enhancing robustness. The averaged model also benefits from variance reduction, improving reliability despite distribution shifts.

As discussed previously, diversity across independently trained models is crucial for unlocking the full potential of model merging. But what are some concrete ways to promote productive diversity?

The WARM paper explores a few clever ideas that could generalize more broadly:

Ordering Shuffles

A trivial but impactful approach is shuffling the order in which data points are seen by each model during training. Even this simple step de-correlates weights, reducing redundant memorization of patterns.

Hyperparameter Variations

Tweaking hyperparameters like learning rate and dropout probability for each run introduces useful diversity. Models converge differently, capturing distinct properties of the dataset.

Checkpoint Averaging – Baklava

The Baklava method initializes models for merging from different snapshots along the same pretraining trajectory. This relaxes constraints compared to model soups, which mandate a shared starting point. Relative to model ratatouille, Baklava avoids additional tasks. Overall, it strikes an effective balance between accuracy and diversity.

The process begins with a pre-trained Large Language Model (LLM) θ_pt. From this model, various checkpoints {θ_i^sft} are derived during a Supervised Fine-Tuning (SFT) run, each collected at a different SFT training step. These checkpoints are then used as initializations for fine-tuning multiple Reward Models (RMs) {φ_i} on a preference dataset. This fine-tuning aims to adapt the models to align better with human preferences. After fine-tuning, these RMs are combined through weight averaging, resulting in the final model, φ_WARM.
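Written compactly, and assuming a uniform average over M reward models, the procedure reads as follows. The count M, the dataset symbol, and the operator name FineTune are notation introduced here for illustration.

```latex
\phi_i = \mathrm{FineTune}\!\left(\theta^{sft}_i,\ \mathcal{D}_{\text{pref}}\right), \quad i = 1,\dots,M,
\qquad
\phi_{\mathrm{WARM}} = \frac{1}{M}\sum_{i=1}^{M}\phi_i .
```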

Analysis confirms that adding older checkpoints through a moving average harms individual performance, offsetting the benefits of diversity. Averaging only the final representations from each run performs better. In general, balancing diversity goals with accuracy maintenance remains an open research challenge.

Overall, model merging aligns well with the general ethos in the field of recycling existing resources effectively for enhanced reliability, efficiency and versatility. The simplicity of weight averaging solidifies its position as a leading candidate for assembling robust models from readily available building blocks.

Unlike traditional ensembling methods that average predictions, WARM keeps computational overhead minimal by maintaining just a single set of weights. Experiments on text summarization tasks demonstrate WARM’s effectiveness:

- For best-of-N sampling, WARM attains a 92.5% win rate against random selection according to human preference labels.

- In RLHF, a WARM policy reaches a 79.4% win rate against a policy trained with a single RM after the same number of steps.

- WARM continues to perform well even when a quarter of the human labels are corrupted.
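For readers unfamiliar with best-of-N sampling, mentioned in the first result, the sketch below shows the basic idea: draw N candidate outputs and keep the one the reward model scores highest. The generator, the word-overlap reward, and the canned summaries are hypothetical stand-ins; in practice `generate` would sample from the policy and `reward` would be the (WARM) reward model.

```python
import random
from typing import Callable, List


def best_of_n(prompt: str,
              generate: Callable[[str, random.Random], str],
              reward: Callable[[str, str], float],
              n: int = 8,
              seed: int = 0) -> str:
    """Sample n candidates and return the one with the highest reward."""
    rng = random.Random(seed)
    candidates: List[str] = [generate(prompt, rng) for _ in range(n)]
    return max(candidates, key=lambda c: reward(prompt, c))


# Hypothetical stand-ins for a policy and a reward model.
CANNED = ["Sales rose.", "Sales rose 4% on cloud demand.", "Nothing happened."]


def toy_generate(prompt: str, rng: random.Random) -> str:
    return rng.choice(CANNED)


def toy_reward(prompt: str, candidate: str) -> float:
    """Counts candidate words that also appear in the prompt."""
    prompt_words = set(prompt.lower().split())
    return float(sum(1 for w in candidate.lower().rstrip(".").split()
                     if w in prompt_words))


prompt = "Summarize: sales rose 4% on strong cloud demand this quarter"
best = best_of_n(prompt, toy_generate, toy_reward, n=8)
```

Because the selection is only as good as the reward function, a more reliable RM translates directly into better picks, which is what the reported win rate measures.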

These results illustrate WARM’s potential as a practical technique for developing real-world AI assistants that behave reliably. By smoothing out inconsistencies in human feedback, WARM policies can remain robustly aligned with human values even as they continue learning from new experiences.

The Bigger Picture

WARM sits at the intersection of two key trends in AI alignment research. The first is the study of out-of-distribution (OOD) generalization, which aims to enhance model performance on new data that differs from the training distribution. The second is research on algorithmic robustness, which focuses on reliability despite small input perturbations or noise.

By drawing connections between these fields around the notion of learned invariances, WARM moves us toward more rigorously grounded techniques for value alignment. The insights from WARM could generalize even beyond RLHF, providing lessons for wider machine learning systems that interact with the open world.

Of course, reward modeling is just one piece of the alignment puzzle. We still need progress on other challenges like reward specification, scalable oversight, and safe exploration. Combined with complementary techniques, WARM could accelerate the development of AI that sustainably promotes human prosperity. By collectively elucidating the principles that underlie robust alignment, researchers are charting the route to beneficial, ethical AI.